In the summer of 2019, I wrote my Bachelor’s thesis, ‘Expression and Recognition of Emotion in Music Across Different Cultures’, in collaboration with the Music Lab, under the guidance of Dr. Samuel Mehr (The Music Lab, Harvard University) and Prof. Dr. Ronald Hübner (University of Konstanz).

Abstract

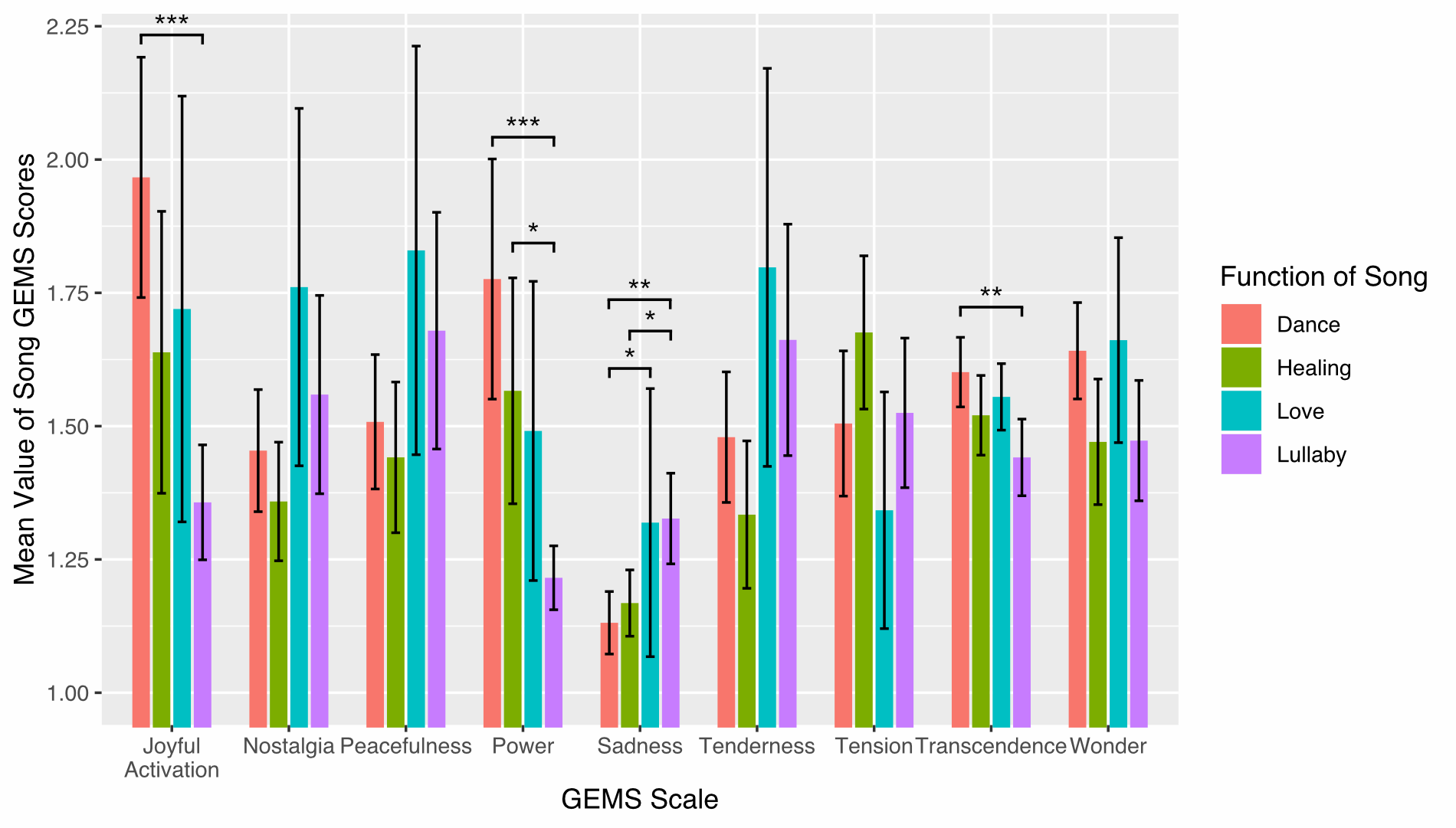

Can humans recognize emotions in music regardless of cultural origin? In this thesis I explore internet users’ recognition of emotions in music from small-scale societies they are unfamiliar with. Participants listened to music from 35 societies and rated the emotions they felt using the Geneva Emotional Music Scales (GEMS) (Zentner, Grandjean, & Scherer, 2008).

The results show that (1) emotion ratings were predictive of the behavioral functions of songs, above and beyond the predictive value of acoustic features alone; (2) acoustic features of songs were moderately predictive of emotion ratings; and (3) cultural distance between listeners was not a reliable predictor of variability in emotion ratings. Furthermore, song preference and high emotion ratings were strongly associated, such that enjoyment of a song was predictive of stronger evoked emotions and vice versa. Finally, analyses of the reliability of the GEMS suggest that this particular scheme for encoding musical emotion may not be ideal for non-Western music: emotions evoked by music from foreign cultures might be less nuanced than those evoked by music from the listener’s own culture, and the number of scales in the GEMS could therefore be reduced in future work to accommodate this. These findings suggest that emotion is fundamentally linked to the perception of form and function in music and raise the possibility of human universals in the perception of musical emotions.

Further Information

Data for the project were collected on themusiclab.org with the ‘What’s Your Musical Style?’ game. Over the 10 weeks of data collection, I recruited N = 2782 participants, which is quite a lot for a Bachelor’s thesis but is dwarfed by the more than 100,000 participants the game has since attracted.

A graph depicting GEMS (Geneva Emotional Music Scales; see Zentner, Grandjean, & Scherer, 2008) emotion ratings for songs with different song functions. 95% confidence intervals were computed on a per-song basis. Brackets highlight significant Tukey contrasts with their corresponding significance levels (* p < .05; ** p < .01; *** p < .001).

How the study I created for my thesis is advertised on the website.

Technology used

The experiment for the thesis was created using jsPsych. Preprocessing and data analysis were performed in R, mainly using the tidyverse along with various smaller packages for specific use cases. For preprocessing, I used data.table in certain cases to reduce memory usage.